
by Hailey Still and Carrie Winfrey


Here we outline the User Testing process and plan for the F-Droid app store for Android. The key aims of F-Droid are to provide users with a) a comprehensive catalogue of open-source apps and b) the ability to transfer any app from their phone to someone in close physical proximity. With this User Test, we hope to gain insight into where the product design succeeds and which aspects need further improvement. The main goals are to obtain a baseline of user performance and to identify potential design concerns regarding ease of use. An additional goal is to promote F-Droid as an alternative to the Google Play app store.

The usability test objectives are to determine usability problem areas within the user interface and content areas. Key focus points include:

- App navigation: failure to locate functions, excessive clicks to complete a task, or failure to complete a task

- Presentation errors: selection errors due to labeling ambiguities

The usability test also aims to gain a deeper understanding of our users, the needs they hope to meet by using F-Droid, and their basic level of satisfaction. Our participants for this round of testing will represent a range of ages, backgrounds and technological literacy levels. All tests will be performed with the guidance and support of a test facilitator.

Methodology

Users will be tested with the assistance of screen and audio recordings, which will be analyzed after the test. A pre-test questionnaire will record basic demographic data, and a post-test survey will gather insight into the overall usability experience. The test will include a standard task completion portion as well as a desirability card-sorting activity (to capture qualitative data on user experience).

Participants will be asked to fill out a demographic and background information survey. The facilitator will explain that the amount of time taken to complete the task will be measured and that they should remain focused on the task. The participant will read the task description, ask any questions they may have and begin the task. Measurement begins when the participant begins the task.

Participants will be encouraged to think aloud. The facilitator will assist participants as little as possible during task completion, and will observe and record user behavior, comments, and actions.

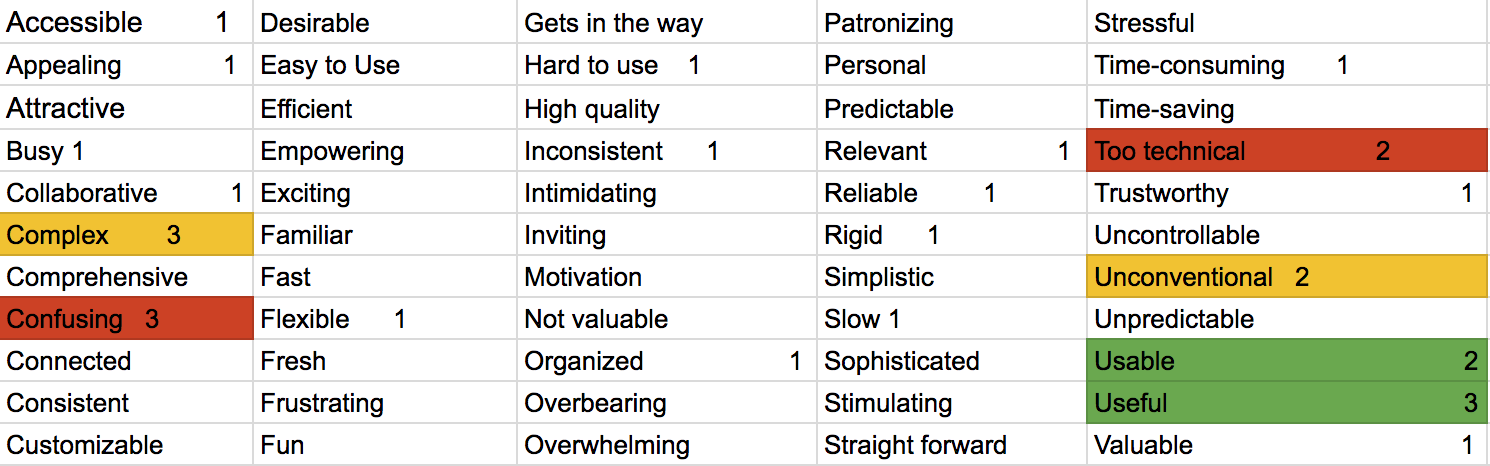

After all task scenarios are attempted, the participant will complete the post-test desirability card activity and survey. In this activity, participants will be asked to choose 5 cards that they feel reflect their overall experience and to elaborate on their choices.

Usability Tasks:

The tasks were administered in random order, with the exception of the “Last Task”, which was always administered last.

TASK A. Search for an app that you would like to download. Install the app you have selected.

TASK B. There is an app that needs to be updated. Find the app and install the update.

TASK C. Find a game app that looks interesting to you. Install the app.

TASK D. Search for a crossword puzzle app within the games category.

TASK E. If you had no internet, how would you download an app?

TASK F. Your friend emails you a link to a collection of their favorite apps. Open Gmail and click on the link they have sent you. Add the collection of apps that your friend emailed to you to F-Droid. Locate an app called Habitica from the collection you just added.

LAST TASK. Add the collection of apps displayed on the computer to F-Droid.
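The ordering rule described above (random order, with the Last Task fixed at the end) could be generated per participant along these lines. This is only a sketch: the function name `randomized_task_order`, the task labels as strings, and the optional `seed` parameter are assumptions for illustration, not part of the actual test procedure.

```python
import random


def randomized_task_order(tasks, last_task, seed=None):
    """Return the tasks in random order, with last_task always at the end.

    `seed` is optional and only makes the order reproducible,
    e.g. one seed per participant (an assumption, not from the test plan).
    """
    rng = random.Random(seed)
    order = list(tasks)      # copy so the caller's list is not modified
    rng.shuffle(order)       # shuffle the regular tasks in place
    order.append(last_task)  # the fixed final task goes last
    return order


# Task labels taken from the list above.
TASKS = ["TASK A", "TASK B", "TASK C", "TASK D", "TASK E", "TASK F"]
order = randomized_task_order(TASKS, "LAST TASK", seed=1)
```

Passing a different seed for each participant would give each of them a different but reproducible task order.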

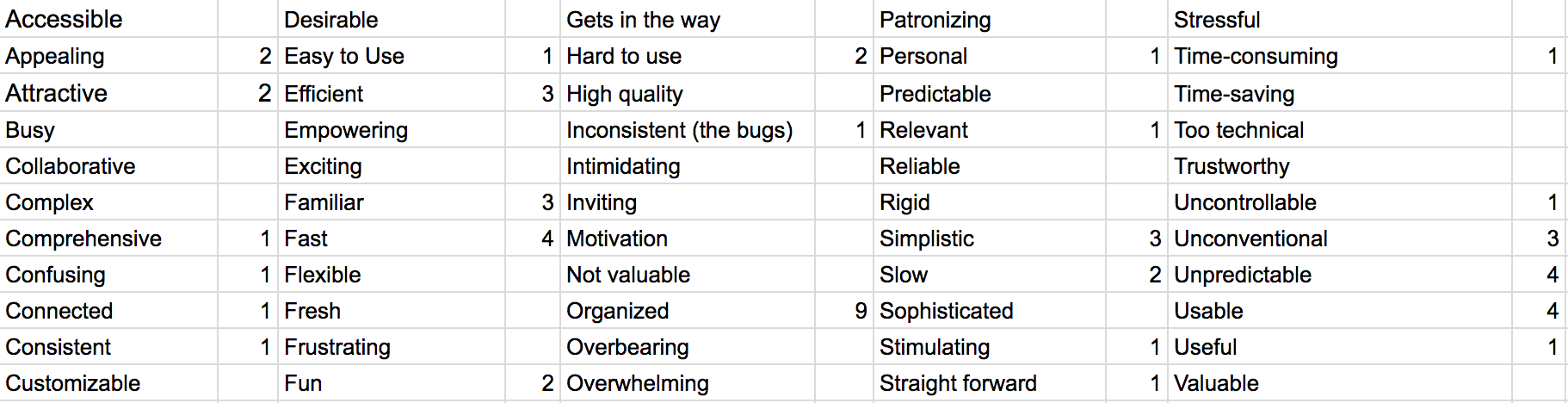

Desirability Cards:

Metrics

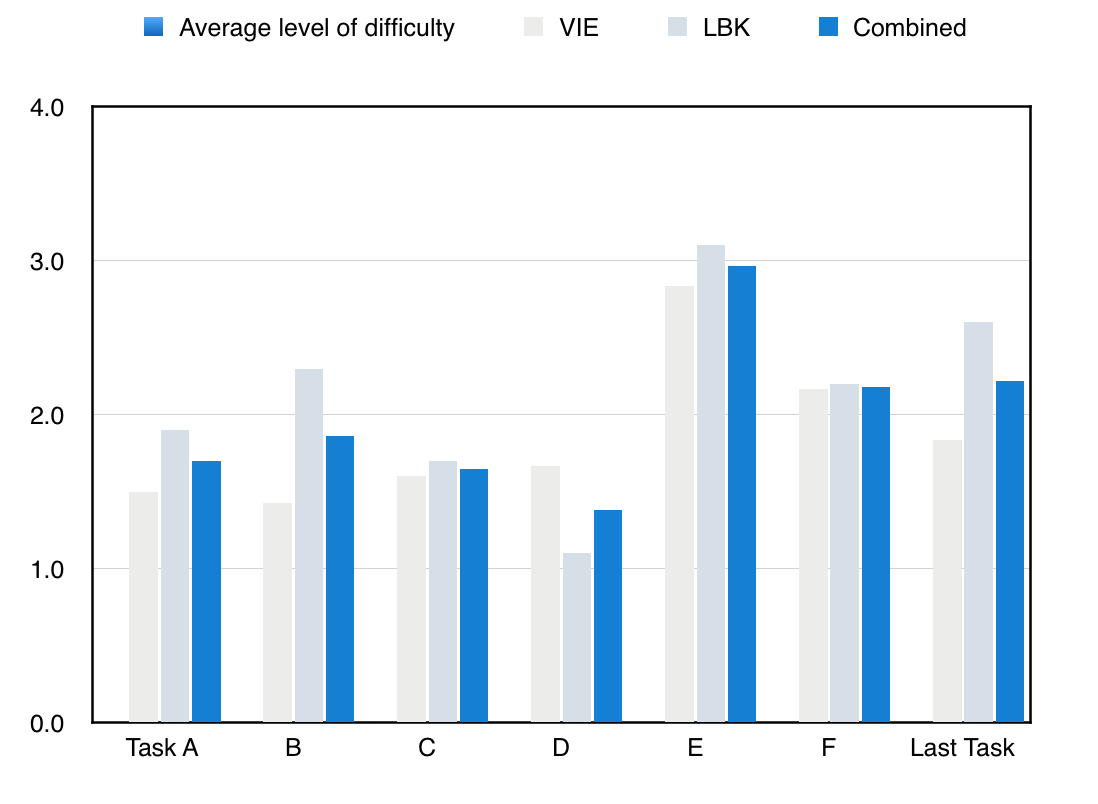

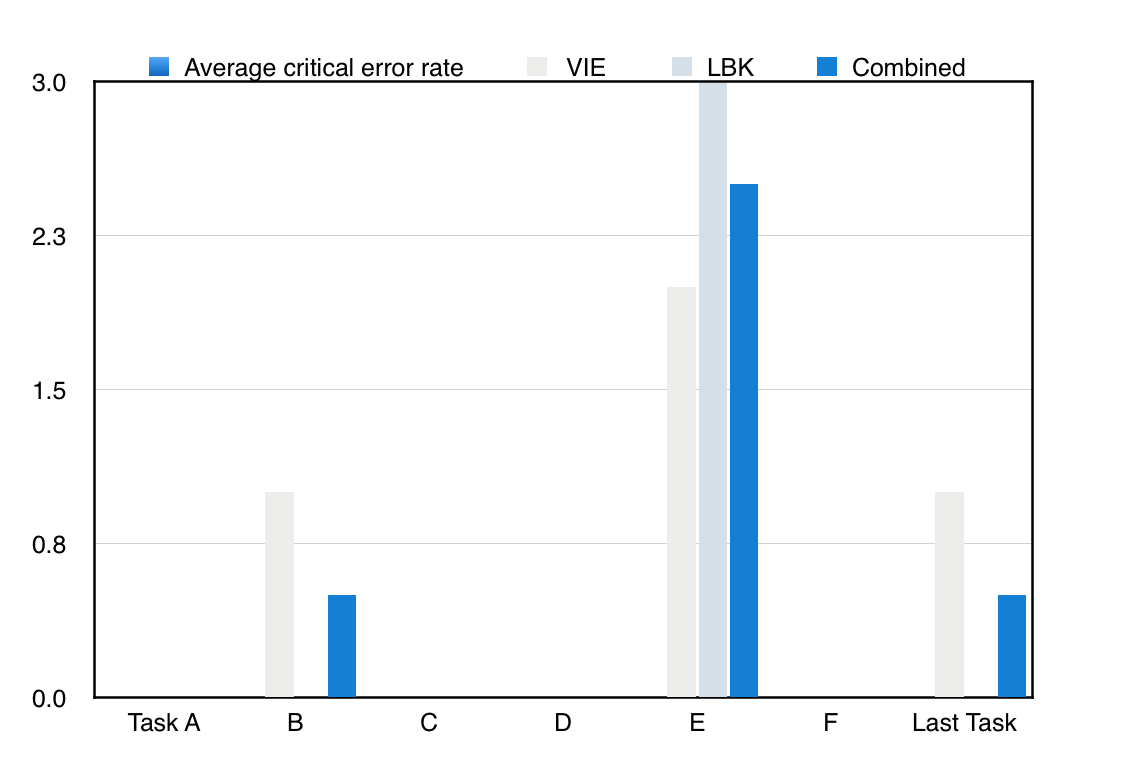

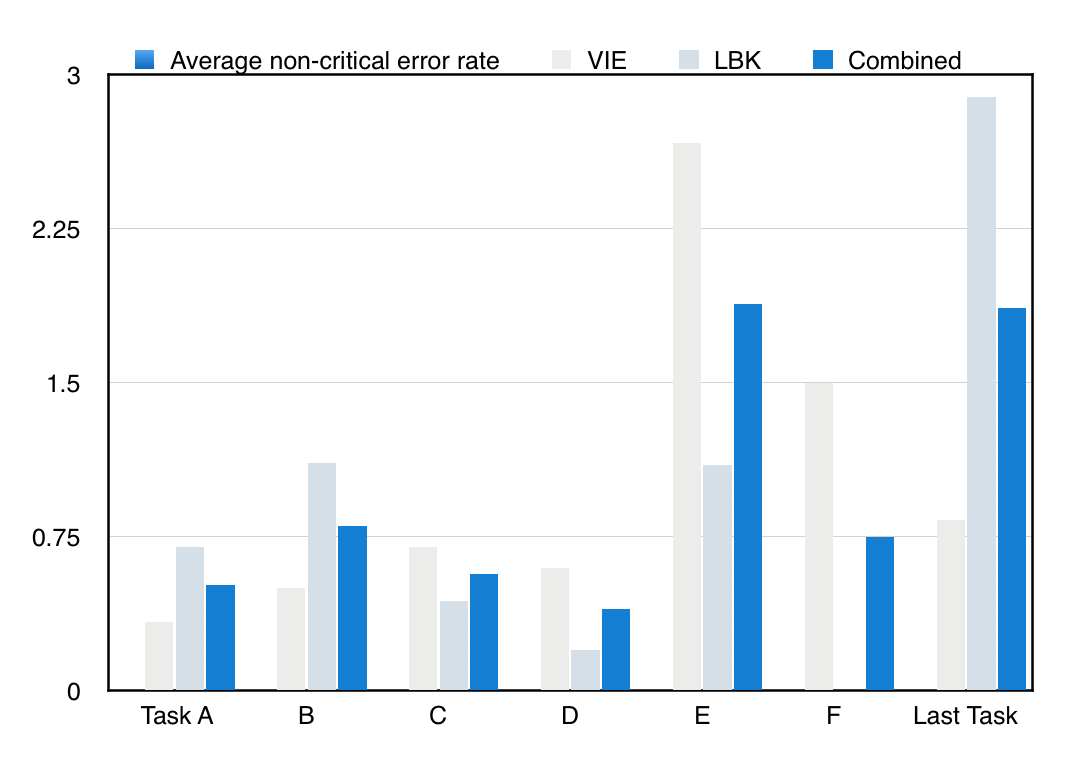

Tests were hosted in two locations: Vienna, Austria and Lubbock, Texas. These charts represent the combined metrics for all tests at both locations.

Level of Difficulty:

Participants were asked to rate the level of difficulty of each task as they completed it.

Average Critical Error Rate:

Critical errors are reported as errors that result in failure to complete the task. Participants may or may not be aware that the task goal is incorrect or incomplete. Independent completion of the task is the goal. Help from the test facilitator or others is to be marked as a critical error.

Average Non-critical Error Rate:

Non-critical errors are errors that the participant recovers from alone and that do not prevent the participant from completing the task. They can include excessive steps taken to complete a task, or initially using an incorrect function and then recovering from that incorrect step.

Non-critical errors do not include exploratory behavior. Exploratory behavior consists of actions that are off task from the main task being attempted.
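As a rough illustration of how the two error metrics could be tallied from facilitator notes, here is a minimal sketch. The record layout (`task`, `critical_error`, `non_critical_errors`) is hypothetical, and so is the choice of formula: it assumes the critical error rate is the fraction of attempts that ended in a critical error, and the non-critical error rate is the mean number of non-critical errors per attempt. The sample records are made up and are not results from this study.

```python
from collections import defaultdict
from statistics import mean


def error_rates(observations):
    """Return {task: (critical_rate, mean_non_critical_errors)}.

    Each observation is one participant's attempt at one task.
    Exploratory behavior is assumed to be excluded before counting.
    """
    by_task = defaultdict(list)
    for obs in observations:
        by_task[obs["task"]].append(obs)

    rates = {}
    for task, rows in by_task.items():
        # Fraction of attempts with a critical error (failure or facilitator help).
        critical_rate = mean(1 if r["critical_error"] else 0 for r in rows)
        # Average count of recovered (non-critical) errors per attempt.
        non_critical = mean(r["non_critical_errors"] for r in rows)
        rates[task] = (critical_rate, non_critical)
    return rates


# Made-up observations, for illustration only.
sample = [
    {"task": "A", "critical_error": False, "non_critical_errors": 1},
    {"task": "A", "critical_error": True, "non_critical_errors": 0},
    {"task": "B", "critical_error": False, "non_critical_errors": 2},
]
rates = error_rates(sample)
```

Averaging per task, rather than across all tasks at once, keeps the figures comparable with per-task charts such as the ones above.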

Desirability Toolkit Responses

Vienna:

Lubbock: