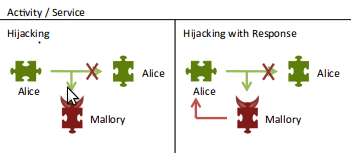

Intents to that `Activity`. Many `Intents` are meant to be public and others are exported as a side effect. Either way, at the very least, it is necessary to sanitize the input that an `Activity` receives. On the other side of the issue, if an app is trusting another app to provide a sensitive service for it, then malware can pose as the trusted app and receive sensitive data from the trusting app. An app does not need to request any permissions in order to set itself up as a receiver of Intents.

Android, of course, does provide some added protections for cases like this. For very sensitive situations, an Activity can be set up to only receive Intents from apps that meet certain criteria. Android permissions can restrict other apps from sending Intents to any given exported Activity. If a separate app wants to send an Intent to an Activity that has been set with a permission, then that app must include that permission in its manifest, thereby publishing that it is using that permission. This provides a good way to publish an API that is gated by a permission while still leaving it relatively open for other apps to use. Other kinds of controls can be based on two aspects of an app that the Android system enforces to remain the same: the package name and the signing key. If either of those changes, then Android considers it a different app altogether. The strictest control is handled by the “protection level”, which can be set to only allow either the system or apps signed by the same key to send Intents to a given Activity. These security tools are useful in many situations, but leave lots of privacy-oriented use cases uncovered.

There are some situations that need more flexibility without opening things up entirely. The first simple example is provided by our app Pixelknot: it needs to send pictures through services that will not mess up the hidden data in the images. It has a trusted list of apps it will send to, based on apps that have proven to pass the images through unchanged. When the user goes to share the image from Pixelknot to a cloud storage app, the user will be prompted to choose from a list of installed apps that match the whitelist in Pixelknot. We could have implemented a permission and asked lots of app providers to implement it, but it seems a mammoth task to get lots of large companies like Dropbox and Google to include our specific permission.
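The filtering step described above could look roughly like the following Java sketch. It is not Pixelknot's actual code: the class name `ShareTargetFilter`, the method `filterTrusted`, and the package names in the list are all hypothetical placeholders. On a device, the list of candidates would presumably come from something like `PackageManager.queryIntentActivities()`, which is left out here so that the logic stays plain Java.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;

// Hypothetical helper for illustration only.
final class ShareTargetFilter {
    // Placeholder allow-list; a real list would name apps that have been
    // tested to pass images through unchanged.
    static final Set<String> TRUSTED_PACKAGES = Set.of(
            "com.example.cloudstorage",
            "com.example.mailer");

    // Keeps only the installed candidates that appear in the allow-list,
    // preserving the order in which they were reported.
    static List<String> filterTrusted(List<String> installedCandidates) {
        List<String> result = new ArrayList<>();
        for (String packageName : installedCandidates) {
            if (TRUSTED_PACKAGES.contains(packageName)) {
                result.add(packageName);
            }
        }
        return result;
    }
}
```

The user would then pick from the filtered list, and the outgoing Intent could be addressed to the chosen package explicitly. Note that this check relies on the package name alone, so it is only as strong as that identifier.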

There are other situations that require even tighter restrictions than are available. The first example here comes from our OpenPGP app for Android. GNU Privacy Guard (GPG) provides cryptographic services to any app that requests them. When the app sends data to GPG to be encrypted, it needs to be sure that the data is actually going to GPG and not to some malware. For very sensitive situations, the Android-provided package name and signing key might not be enough to ensure that the correct app is receiving the unencrypted data. Many Android devices are still unpatched against master key bugs, and people using Android in China, Iran, and other places where the Play Store is not allowed don’t get the exploit scanning provided by Google. Telecoms around the world have proved to be bad at updating the software for the devices that they sell, leaving many security problems unfixed. Alternative Android app stores are a very popular way to get apps. So far, the ones that we have seen provide minimal security and no malware scanning. In China, Android is very popular, so this represents a lot of Android users.

Another potential use case revolves around a media reporting app that relies on other apps to provide images and video as part of regular reports. This could be something like a citizen journalist editing app or a human rights reporting app. The Guardian Project develops a handful of apps designed to create media in these situations: ObscuraCam, InformaCam, and a new secure camera app in the works that we are contributing to. We want InformaCam to work as a provider of verifiable media to any app. It generates a package of data that includes a cryptographic signature so that its authenticity can be verified. That means that the apps that transport the InformaCam data do not need to be trusted in order to guarantee the integrity of the uploaded InformaCam data. Therefore it does not make sense in this case for InformaCam to grant itself permissions to access other apps’ secured Activities. It would add to the maintenance load of the app without furthering the goals of the InformaCam project. Luckily there are other ways to address that need.

The inverse of this situation is not true. The reporting app that gathers media and sends it to trusted destinations has higher requirements for validating the data it receives via Intents. If verifiable media is required, then this reporter app will want to only accept incoming media from InformaCam. Well-known human rights activists are often the target of custom malware designed to get information from their phones. For this example, a malware version of InformaCam could be designed to track all of the media that the user is sending to the human rights reporting app. To prevent this, the reporter app will want to only accept data from a list of trusted apps. When the user tries to feed media from the malware app to the reporting app, it would be rejected, alerting the user that something is amiss. If a reporting app wants to receive data only from InformaCam, it needs to have some checks set up to enforce that. The easiest way for the reporting app to implement those checks would be to add an Android permission to the receiving Activity. But that requires the sending app, which in the example above is InformaCam, to implement the reporting app’s permission. Using permissions works for tailored interactions. InformaCam aims to bring tighter security to all relevant interactions, so we need a different approach. While InformaCam could include some specific permissions, the aim is to have a single method that supports all the desired interactions. Having a single method here means less code to audit, less complexity, and fewer places for security bugs.

We have started auditing the security of communication via Intents, while also working on various ideas to address the issues laid out so far. This will include laying out best practices and defining gaps in the Android architecture. We plan on building the techniques that we find useful into reusable libraries to make it easy for others to also have more flexible and trusted interactions. When are the standard checks not enough? If the user has a malware version of an app that exploits master key bugs, then the signature on the app will be valid. If a check is based only on a package name, malware could use any given package name. Android enforces that only one app can be installed with a given package name, but if there are multiple apps in circulation with the same package name, Android will not prevent you from installing the malware version.

Intents from, the installed app is then compared to the pre-stored pinned value. This kind of pinning allows for checks like the Signature permission level but based on a key that the app developer can select and include in the app. The built-in Signature permissions are fixed on the signing key of the currently running app.
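As a rough illustration of comparing an installed app against a pre-stored pinned value, here is a minimal Java sketch. It assumes the pin is a SHA-256 digest; the class `PinCheck` and its method names are hypothetical, and how the bytes are obtained (for example the app's signing certificate or the contents of its APK file) is deliberately left outside the sketch.

```java
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

// Hypothetical helper for illustration only.
final class PinCheck {
    // Computes the SHA-256 digest of the given bytes.
    static byte[] sha256(byte[] data) {
        try {
            return MessageDigest.getInstance("SHA-256").digest(data);
        } catch (NoSuchAlgorithmException e) {
            // SHA-256 is required on every Java platform, so this is unexpected.
            throw new IllegalStateException("SHA-256 unavailable", e);
        }
    }

    // Returns true only if the digest of the installed app's bytes equals
    // the pinned digest that was shipped with the checking app.
    static boolean matchesPin(byte[] installedBytes, byte[] pinnedSha256) {
        return MessageDigest.isEqual(sha256(installedBytes), pinnedSha256);
    }
}
```

The checking app would refuse to send or accept the Intent whenever `matchesPin` returns false. Choosing between pinning the signing certificate and pinning the whole APK is a trade-off: a certificate pin survives app updates, while a file hash has to be refreshed for every release.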

TOFU/POP means Trust-On-First-Use/Persistence Of Pseudonym. In this model, popularized by SSH, the user marks a given hash or signing key as trusted the first time they use the app, without extended checks about that app’s validity. That mark then describes a “pseudonym” for that app, since there is no verification process, and that pseudonym is remembered for comparison in future interactions. One big advantage of TOFU/POP is that the user has control over which apps to trust, and that trust relationship is created at the moment the user takes an action to start using the app that needs to be trusted. That makes it much easier to manage than using Android permissions, which must be managed by the app’s developer. A disadvantage is that the initial trust is basically a guess, and that leaves open a way for malware to get in. The problem of installing good software, and avoiding malware, is outside of the scope of securing inter-app communication. Secure app installation is best handled by the process that is actually installing the software, as the Google Play Store or F-Droid do.
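The TOFU/POP flow can be sketched in a few lines of Java. This is only an illustration under simplifying assumptions: the class `TofuStore` and its methods are hypothetical, the fingerprint is treated as an opaque byte array (a hash or signing key), and the store lives in memory, whereas a real app would need to persist it.

```java
import java.security.MessageDigest;
import java.util.HashMap;
import java.util.Map;

// Hypothetical in-memory trust store for illustration only.
final class TofuStore {
    enum Result { UNKNOWN, MATCH, MISMATCH }

    // Remembered pseudonyms: package name -> fingerprint trusted on first use.
    private final Map<String, byte[]> remembered = new HashMap<>();

    // Compares a fingerprint against the remembered one, if any.
    Result check(String packageName, byte[] fingerprint) {
        byte[] known = remembered.get(packageName);
        if (known == null) {
            return Result.UNKNOWN; // first use: ask the user before trusting
        }
        return MessageDigest.isEqual(known, fingerprint) ? Result.MATCH : Result.MISMATCH;
    }

    // Records the fingerprint after the user has chosen to trust the app.
    // An existing entry is never silently replaced.
    void trust(String packageName, byte[] fingerprint) {
        remembered.putIfAbsent(packageName, fingerprint.clone());
    }
}
```

A caller would treat `UNKNOWN` as the moment to ask the user, `MATCH` as permission to proceed, and `MISMATCH` as a warning that the app behind the package name has changed.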

To build on the InformaCam example, in order to set up a trusted data flow between InformaCam and the reporting app, custom checks must be implemented on both the sender and the receiver. The sender, InformaCam, should be able to send to any app, but it should then remember the app that it is configured to send to and make sure it’s really only sending to that app. It would then use TOFU/POP with the hash as the data point. The receiver, the reporting app, should only accept incoming data from apps that it trusts. The receiver then includes a pin for the signing key, or, if the app is being deployed to devices that have not been updated, the pin can be based on the hash to work around master key exploits. From there on out, the receiving app checks against the stored app hashes or signing keys. For less security-sensitive situations, the receiver can rely on TOFU/POP the first time that an app sends media.

There are various versions of these ideas floating around in various apps, and we have some in the works. We are working now to hammer out which of these ideas are the most useful, then we will be focusing our development efforts there. We would love to hear about any related efforts or libraries that are out there. And we are also interested to hear about entirely different approaches from what has been outlined here.